|

| Does your data warehouse belong in the cloud? |

Are you interested in leveraging proprietary data that is already being collected by your organization or doing the same with readily available public data? Data science and business analytics should not merely be viewed as the latest buzzwords. But rather as a combination of formal disciplines in the arts and sciences, some possibly even traditional.

This article describes my thought process behind the choice of storage platform focusing on the Google Cloud Platform from a high level with respect to the volunteer work I performed for the JerseySTEM (a non-profit organization), where data from the State of New Jersey Board of Education had to be analyzed; primarily to meet the objectives of identifying under-served communities and efficiently allocating resources for Science Technology Engineering and Mathematics (STEM) educational initiatives.

This is not a technical "how to" document. There are already many other well written tutorials available on the internet so there is no real need to reinvent the wheel. However, documentation links have been included whenever they were referenced as part of my decision making process.

The Big picture

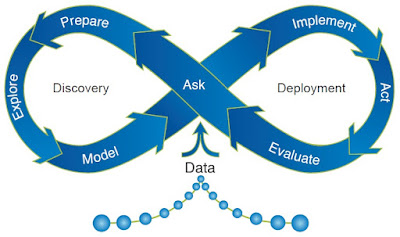

Although this article focuses on the planning and design of the data repository within a data analysis project, it is worthwhile noting where this fits with respect to the SAS Analytical Life Cycle (taken from the white paper on Data Mining From A to Z).

Considering cloud hosted services

Unless you were pulled into a project at the ground level, there would usually be a data repository already available in most situations. However specific to the JerseySTEM use case, although the external source data was previously established, there was no existing functional and working data repository already in place that was usable for performing the research and analysis that was required. As such, there was a need to build the database from scratch.

Being a non-profit organization project, it was extremely important to be sensitive to the requirement of being cost conscious. Even so, I did not want to compromise on the pre-requisites of having a robust, easily supportable and scalable model that outsourced cloud platforms provided - via Software as a Service (SaaS), Platform as a Service (PaaS) or Infrastructure as a Service (IaaS).

Hence, my first order of business was to consult with industry subject matter experts who had experimented with cloud technologies before as end users. I found that there existed a general consensus among them that cloud storage pricing was cheap to start with. But could potentially escalate if there was a future need for high bandwidth extraction of data due to egress charges; akin to the cost structures of back-end loaded mutual funds as an analogy.

That said, the biggest attraction to a cloud based data storage solution to me personally, was that it was lightweight in terms of support from the organizational angle, something extremely valuable to a non-profit organization since volunteers come and go.

Bearing in mind that every use case is different, we could control operating costs by:

- Limiting egress activity, in our case, to our internal team of data analysts and scientists. Public data could be published to other more cost efficient platforms for general consumption without turning on public access to the database.

- If need be, we could turn the database on/off (like the light switch in a room) whenever our data analysts needed to access the data, conditional upon an additional separate layer for public access as described in the previous point and given that the external sourced data is only updated annually.

Exploring different cloud storage types

Given our very specific requirements, I decided on a cloud based solution with Google since it was less cumbersome administratively as we were already using their email services. Furthermore, there was a requirement to convert a significant number of address locations from the school directory to GPS coordinates in an initial upload of school reference data into the database. Google was offering $300 in credits over a limited trial period, and the ability to reduce the amount of manual labor associated with free GPS look ups using the Google Maps Geocoding API albeit for a limited time sealed the deal.

At the end of the day, given that the data in question was highly structured and also not within the realm of big data (size wise), I chose a traditional MySQL Relational Database Management System (RDBMS) hosted on Google Cloud SQL. It didn't make sense purely from a data storage perspective to attempt to fit a square peg in a round hole by forcing the data into a NoSQL solution such as Google Cloud BigTable or Google Cloud Datastore. Despite spending some time considering and experimenting with NoSQL options simply due to their more affordable storage pricing model, it was still time well spent as they could be more efficiently and cost effectively deployed when the need arose for more complex data analyses in the future.

Data repository design

I spent years working as a front office desk developer in financial services. Those familiar with the industry would know that it basically entails direct interactions with traders and salespeople, involving high impact deliverables with time critical turnarounds. Having come from this background, I realized early on in my career, the importance of designing and building easily supportable and highly configurable applications. By applying similar design principles within the world of database schema design, this meant keeping the schema as flexible as possible with the ability to scale not just in terms of size but also functionality.

Extract, Transform and Load

Before we could proceed with any form of analysis, the data had to be extracted, transformed and loaded into a database, as is commonly known as the Extract, Transform and Load (ETL) process within the data industry.With the education data, the majority of it sat in Excel spreadsheets and Comma Separated Value (CSV) files, mostly as some form of pivot table segregated by worksheets or files per academic year. All this had to be transformed into a format that would fit data uploads into a normalized RDBMS table, where data quality would then have to be validated and finally retrieved as Structured Query Language (SQL) queries formulated for analysis in the decision making process.

Data quality is paramount

As most data professionals are probably familiar with, the ETL process is only a subset of the 11 data management knowledge areas (see the Guide Knowledge Area Wheel) as stipulated in the The Data Management Association (DAMA) Data Management Body of Knowledge (DMBOK). But this topic is beyond the scope of this article. Interested readers can refer to the DMBOK for a deeper understanding of data management best practices. For now, it would suffice to say that data quality is paramount and reasonable efforts should be dedicated to any data science and analytics project to avoid the dreadful situation of "Garbage In Garbage Out"!

Conclusion

In summary, anybody planning on building a data warehouse from scratch should consider all options, from building and supporting it in house to vendor outsourcing with a cloud service provider. Be familiar with and aware of the different pricing packages available and the costs (both in terms of time and monetary investments) associated with each option, always factoring that in your final decision.

This post was first published on LinkedIn.